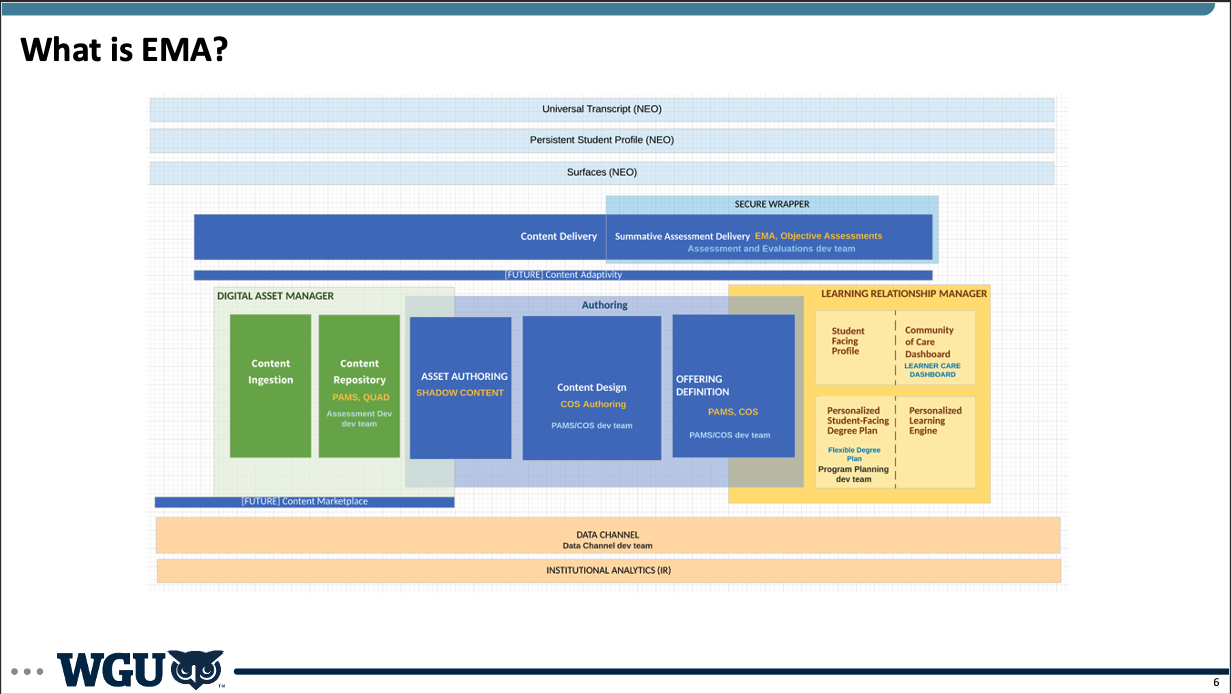

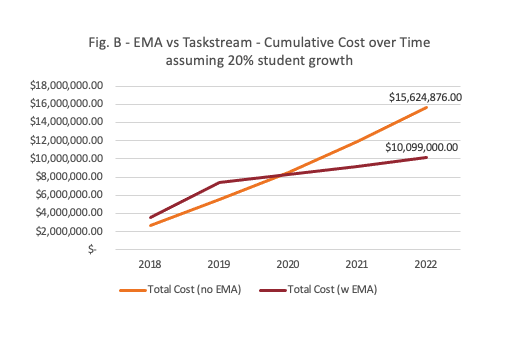

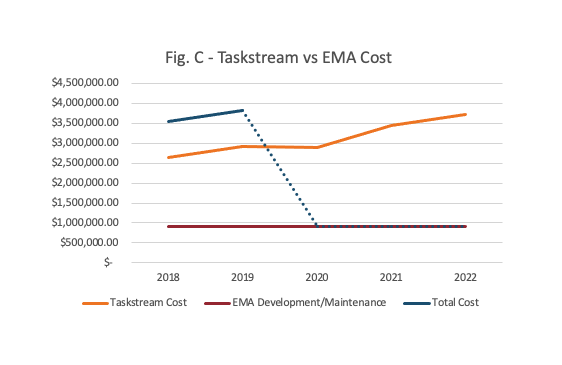

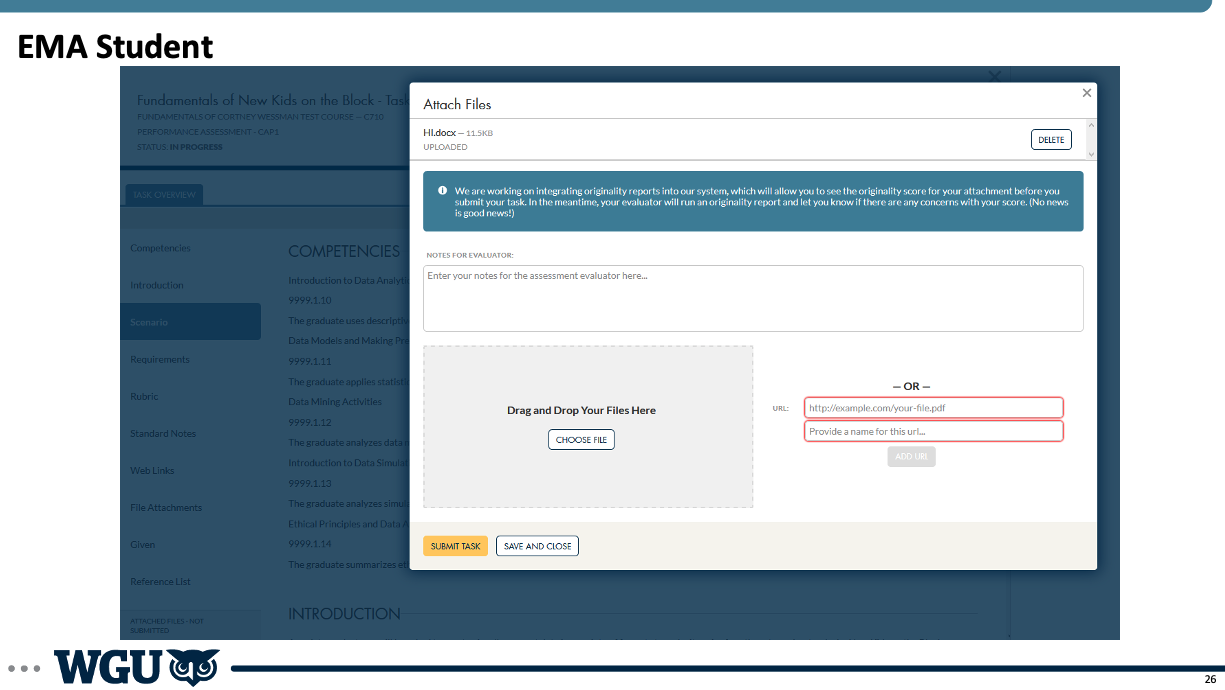

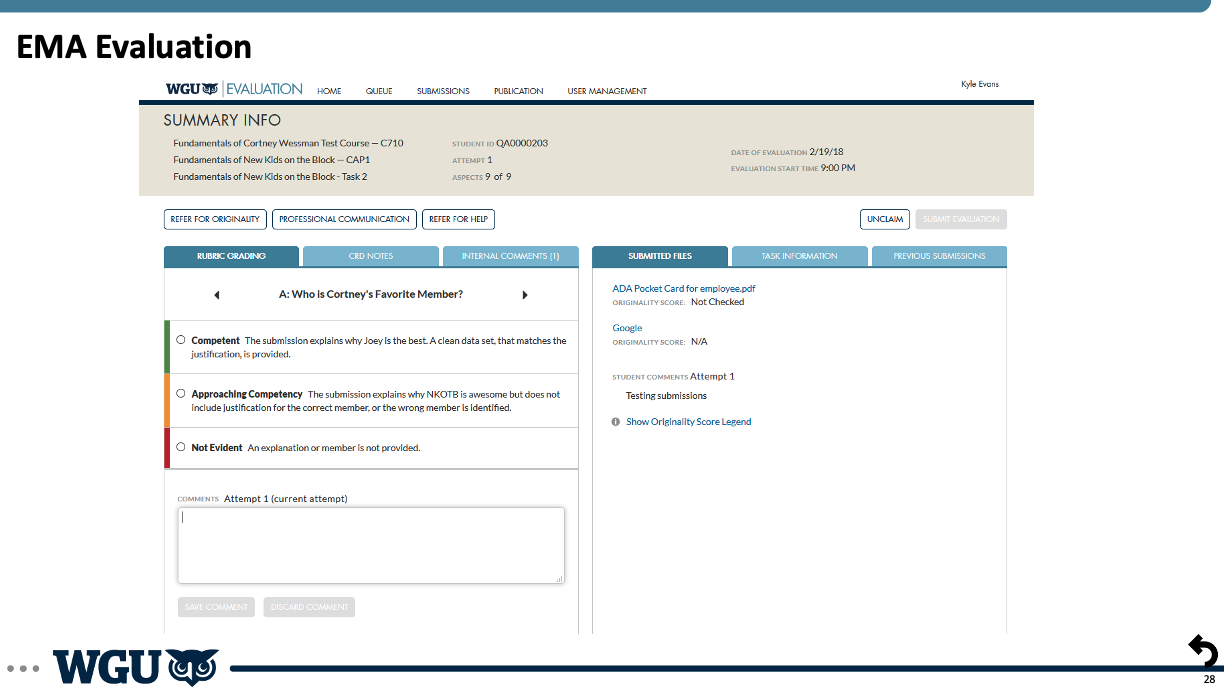

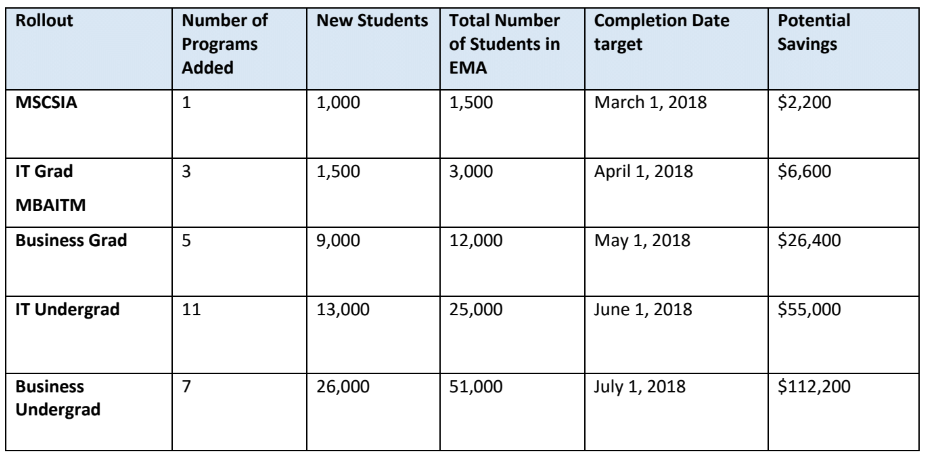

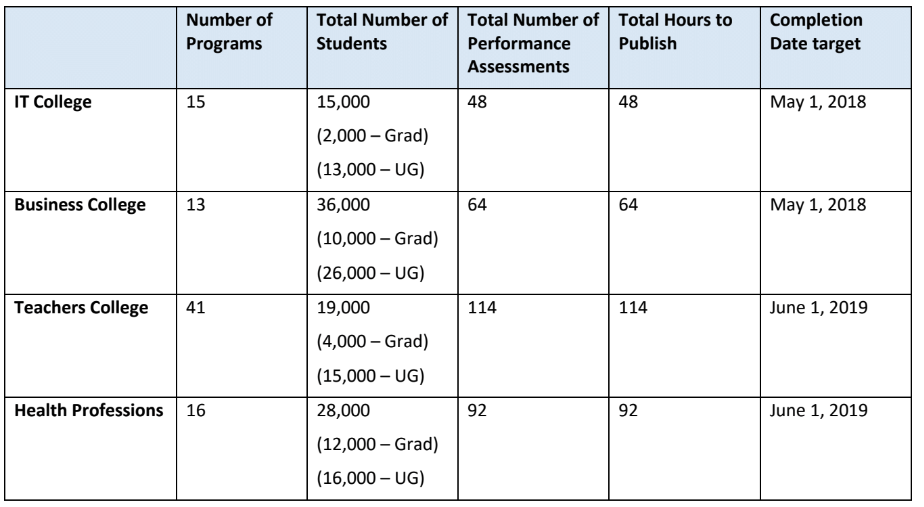

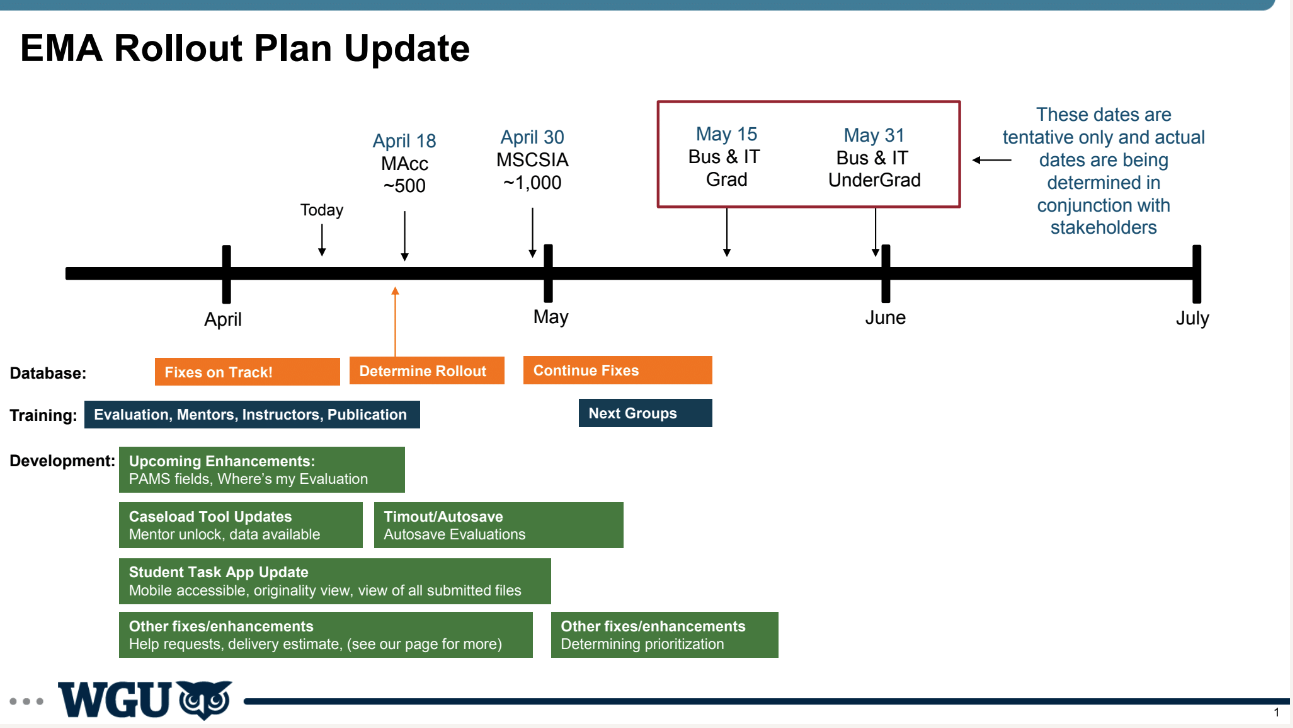

Background

For years, as Western Governors University (WGU) grew, it outsourced much of its technology to third parties. This made sense at first, while the university focused on education. But it became clear that in order to scale, WGU would need to bring that technology in-house, not only to better serve its students but also because it followed a different education model. Existing higher-education technology no longer served its needs, and the university wanted to create software that it could not only use itself but also offer to other institutions interested in competency-based learning. With that in mind, WGU built out a product management organization. I was among its first members and had the opportunity to help build and shape it over my years there. In addition to building the product organization, I also helped build out one of the university's key areas: its assessment and evaluation software. In competency-based education this is one of the most critical elements, since testing knowledge is at the heart of competency. I led several teams within the Evaluation Department and had the opportunity to imagine, design, and build from scratch one of the key pieces of technology for evaluation management. We affectionately called it EMA, the Evaluation Management Application. And while the experience for users, especially students, was designed to be seamless, EMA was a massive effort and, in many ways, a flagship product for WGU.

EMA

EMA was three applications in one. First, it was an assessment creation and publishing application. To make assessments available on the platform, we needed to give users, in this case WGU faculty, the ability to create and publish assessments in EMA. This was no small task, since we had an entire department dedicated to developing assessments.
I worked closely with several teams to make sure we built the right tools to support not only publishing but also the different workflows for creating assessments. We started with very basic functionality and then added layers of capability for faculty over time.

Second, there was the student-facing portion of the application. It was fully integrated into the student portal, so students never had to leave WGU to find or submit their tests. This was one of the most important parts of the application early on, because we wanted the student experience to be simple and intuitive from the start, even if we had to do a lot in the background to make that happen.

Finally, there was the evaluation portion of the platform. This is where evaluators (a separate group of faculty) came in to evaluate submitted exams, grade them, and return them to students. Evaluation faculty were one of the largest faculty groups at WGU, so making the most of their time was critical.

When we began developing EMA, we tested with just one exam, one class of 13 students, and a single evaluator. That turned out to be a good thing, because we had to do a significant amount of work "behind the curtain" to make everything function. What we learned there let us grow significantly over the following months.

Benefits

Developing EMA brought numerous benefits. We knew early on that no solution on the market was built for competency-based education, so a platform built specifically for that purpose would benefit our university, our faculty, our students, and the industry. We also knew that if we wanted to scale as a university and help competency-based education scale more broadly, we had to do this. We couldn't keep paying costs that grew linearly as we grew. This was one of the key pieces of analysis I did early on.
That analysis mattered because many stakeholders were skeptical of the need to create development teams, dedicate significant resources, and ultimately own all of this technology internally. Finance was chief among the skeptics, so I did the initial analysis and then provided ongoing updates to show our progress.

Development

As I mentioned, we developed EMA iteratively. We started with a few students and a single evaluator, doing much of the work manually behind the scenes to test our processes and make things work without building too much too early. We then brought in more colleges, departments, students, and evaluators, and the complexity grew significantly as we scaled. I could share many stories of lessons learned and problems we had to overcome, but I'll touch on just a few here.

Early on, we decided to build on a Cassandra database. Cassandra is a non-relational (NoSQL) database. It is good for many things, provided you have the expertise to maintain it. We made this architectural decision based on the expertise we had at the university at the time, but it turned out to be a mistake. A non-relational database was not right for our purposes, and our Cassandra expert soon left, leaving us without the expertise to maintain the database properly. As we scaled, we began to notice performance issues. In Cassandra, deleting a record doesn't remove it immediately; it leaves a marker called a "tombstone." We didn't realize we needed to clean up those tombstones, and as they accumulated they caused further problems.

We were also part of a broader organizational effort to migrate to AWS. Given our team's lack of expertise in non-relational databases, the need to move to AWS, and the desire to manage our application and data more easily with a relational database, we decided that before we fully scaled up, we should move from Cassandra to Amazon Aurora on AWS. This meant rewriting most of our application and doing a full data migration.
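
The tombstone problem is easier to see in a small model. What follows is a hypothetical sketch in Python, not Cassandra code and not how EMA was built: `ToyStore`, `Cell`, and their methods are invented for illustration, and the model assumes a single node with simple last-write-wins cells. It shows that a delete writes a marker rather than removing data, that every read keeps stepping over those markers, and that compaction only purges them once a grace period has passed (modeled on Cassandra's `gc_grace_seconds` table option, which defaults to 864,000 seconds, or 10 days).

```python
from dataclasses import dataclass

# Sentinel standing in for a Cassandra-style tombstone (a "deleted" marker).
TOMBSTONE = object()


@dataclass
class Cell:
    value: object
    written_at: float  # write time, in seconds


class ToyStore:
    """Hypothetical toy key-value store that mimics Cassandra-style deletes.

    Deletes write a tombstone instead of removing data, reads have to step
    over tombstones, and compaction purges only tombstones older than the
    grace period (modeled on Cassandra's gc_grace_seconds table option).
    """

    def __init__(self, gc_grace_seconds: float) -> None:
        self.gc_grace_seconds = gc_grace_seconds
        self.cells: dict[str, Cell] = {}

    def put(self, key: str, value: object, now: float) -> None:
        self.cells[key] = Cell(value, now)

    def delete(self, key: str, now: float) -> None:
        # A delete is just another write: the key now holds a tombstone.
        self.cells[key] = Cell(TOMBSTONE, now)

    def scan(self) -> tuple[list[tuple[str, object]], int]:
        """Return live rows in key order plus the number of tombstones skipped."""
        live: list[tuple[str, object]] = []
        skipped = 0
        for key in sorted(self.cells):
            cell = self.cells[key]
            if cell.value is TOMBSTONE:
                skipped += 1  # wasted work on every read until compaction
            else:
                live.append((key, cell.value))
        return live, skipped

    def compact(self, now: float) -> int:
        """Purge tombstones whose grace period has expired; return how many."""
        expired = [
            key
            for key, cell in self.cells.items()
            if cell.value is TOMBSTONE and now - cell.written_at >= self.gc_grace_seconds
        ]
        for key in expired:
            del self.cells[key]
        return len(expired)


if __name__ == "__main__":
    store = ToyStore(gc_grace_seconds=864_000)  # Cassandra's default: 10 days
    for i in range(1000):
        store.put(f"submission-{i:04d}", "graded", now=0)
    for i in range(990):
        store.delete(f"submission-{i:04d}", now=1)
    print(store.scan()[1])  # 990 tombstones skipped to return 10 live rows
    print(store.compact(now=100))  # 0: grace period not yet expired
    print(store.compact(now=1 + 864_000))  # 990 purged
    print(store.scan()[1])  # 0
```

A real cluster is far more involved (tombstones live in immutable SSTables, compaction strategies vary, and the grace period exists so replicas can learn about a delete before its marker disappears), but the toy shows why a delete-heavy workload with no attention to compaction makes reads progressively slower.
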
We had to pause all feature development. I led the team through discovery to flesh out the details and estimate the scope of work, and I worked with stakeholders to help everyone understand why the architectural changes were needed and what the benefits would be once they were done. We estimated the work, created a plan, and began. As with all projects, it took longer than our optimistic plan but stayed in line with our conservative one (or perhaps with Hofstadter's Law), and we completed the move to AWS and to an Aurora database.

Additionally, we created a seamless user experience for faculty and students. Students no longer had to submit their exams through an outside portal or vendor; they could access everything within the WGU student portal. For faculty, we created a more seamless interface that matched the way they worked. Rather than forcing them to conform to an application, we built an application and platform that worked with them, saving them time and mental energy and helping them get through their work more quickly.

Launch

The rollout and launch of EMA across WGU was a multi-phase process. It involved working with every department at the university in some way, since the platform touched everyone at some point. First, I had to determine which courses and programs made the most sense to focus on, and from there, how to get those assessments, students, and faculty onto our platform. This required tremendous buy-in from across the university, especially as the number of courses, students, and faculty grew.

I faced competing pressures as we built and launched. There was pressure to move quickly, get our new platform out, and start realizing the cost savings and UX benefits. But we also had to balance that against the changes we were asking everyone to make, especially to longstanding processes that were not easy to adjust.
While those of us in technology love to move quickly, other disciplines and departments don't always work that way, and we have to be conscious of that. I worked closely with many groups, and we made many adjustments to our timelines and rollouts as we went. But we did hit our key goals: launching to the whole university on schedule and, eventually, bringing other universities onto the platform as well.


Archives

January 2023
